pytorch - Why tensorflow GPU memory usage decreasing when I increasing the batch size? - Stack Overflow

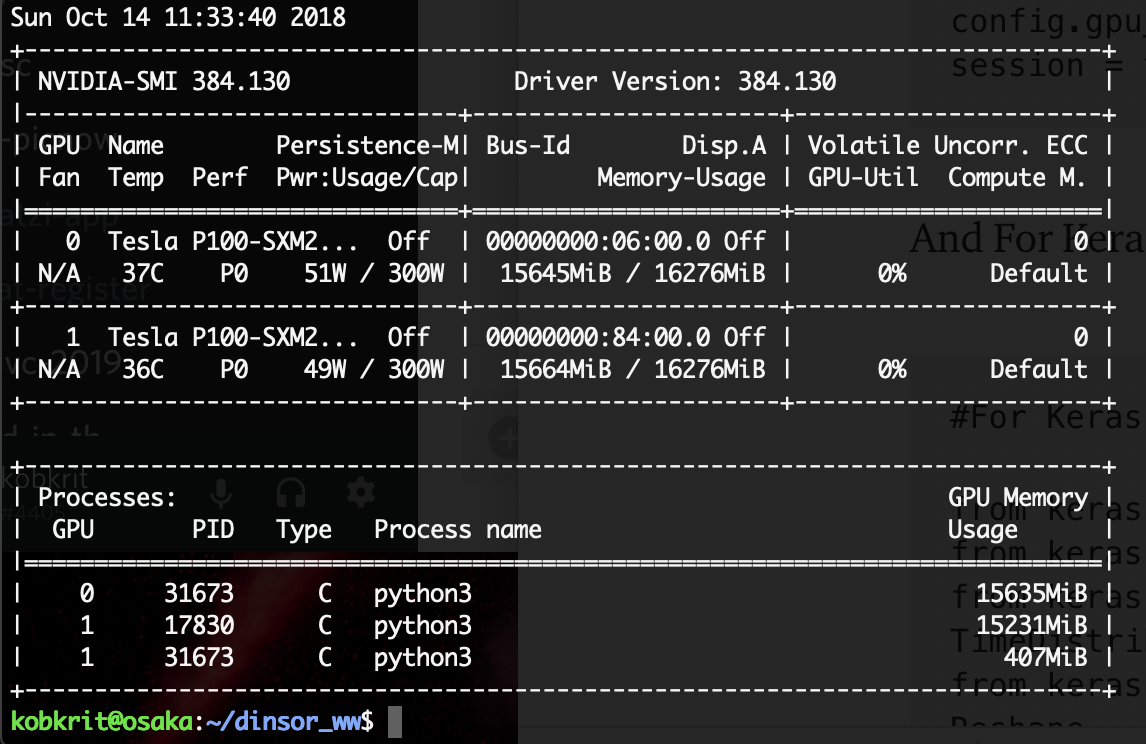

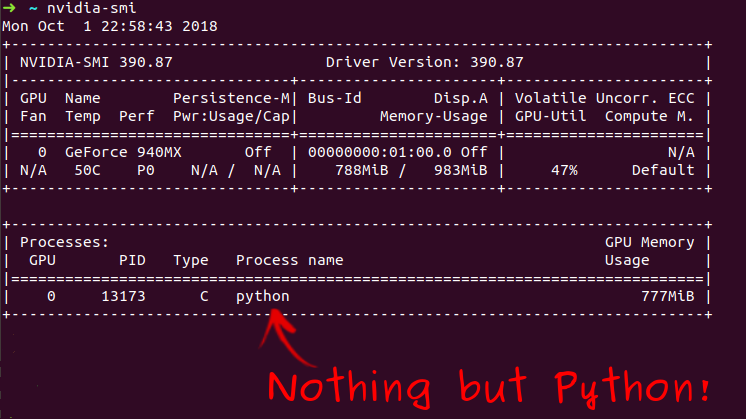

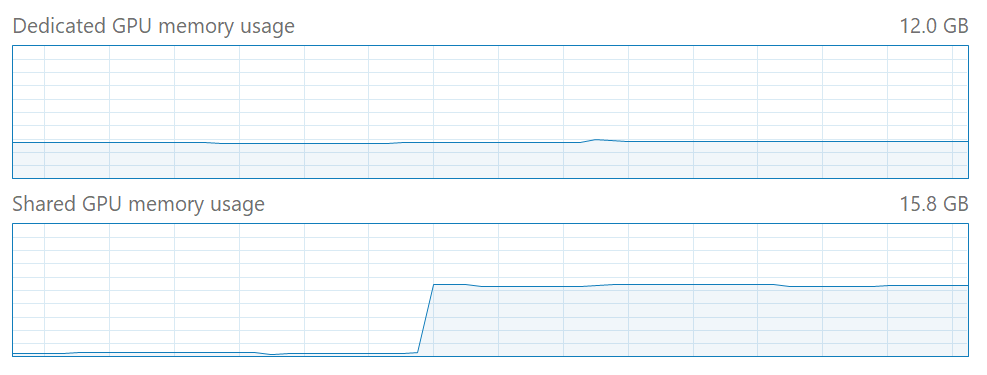

Force Full Usage of Dedicated VRAM instead of Shared Memory (RAM) · Issue #45 · microsoft/tensorflow-directml · GitHub

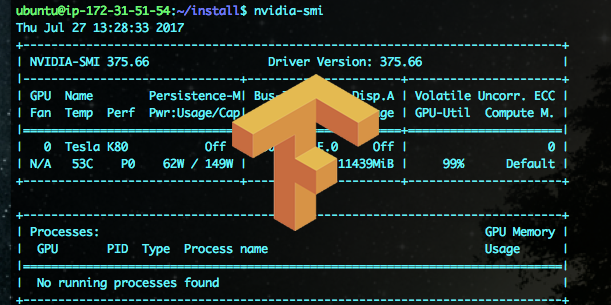

How to dedicate your laptop GPU to TensorFlow only, on Ubuntu 18.04. | by Manu NALEPA | Towards Data Science

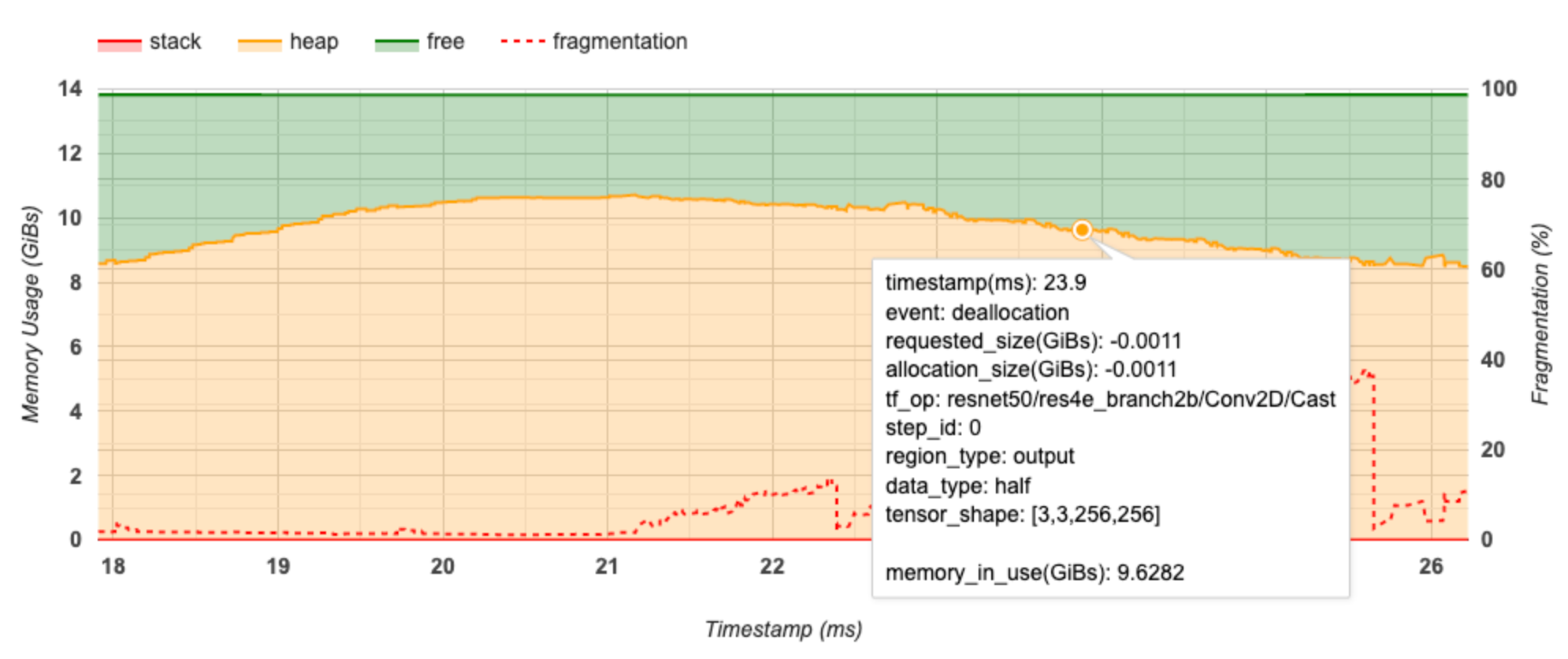

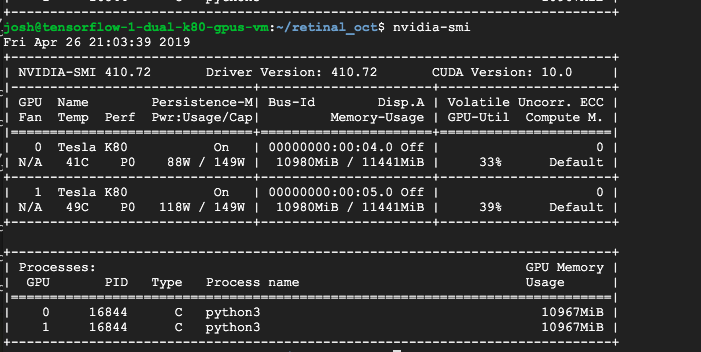

Memory Hygiene With TensorFlow During Model Training and Deployment for Inference | by Tanveer Khan | IBM Data Science in Practice | Medium

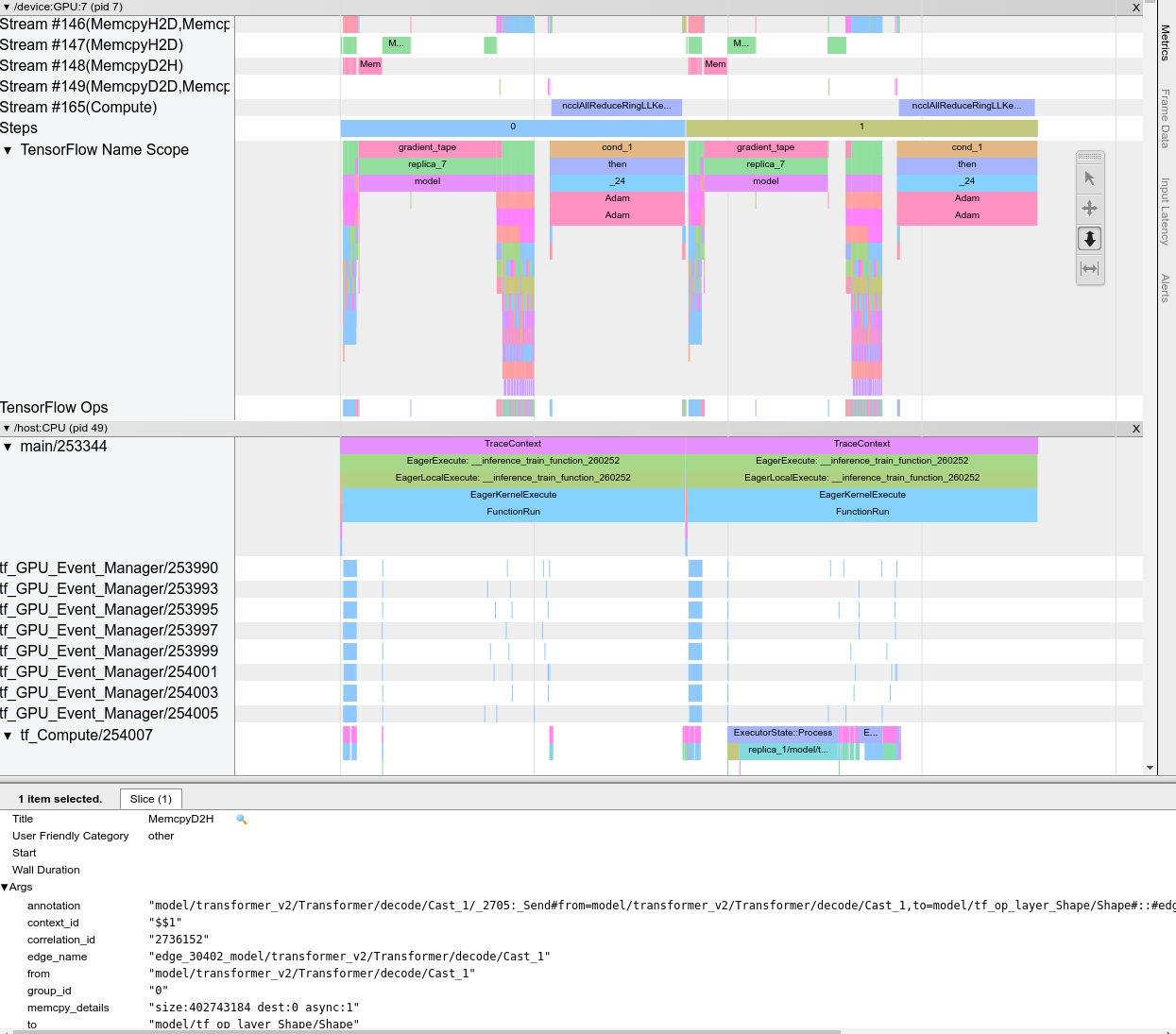

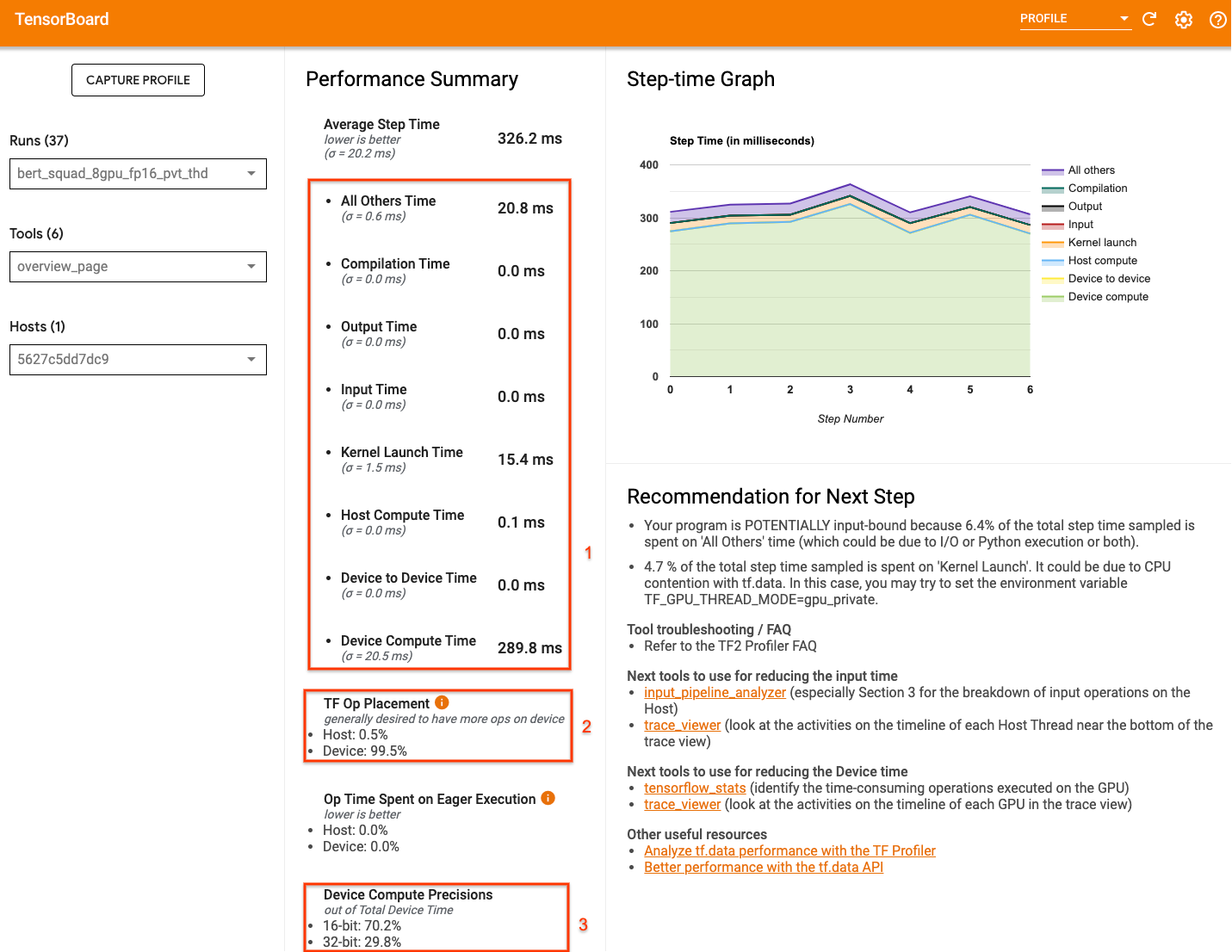

![Training Deeper Models by GPU Memory Optimization on TensorFlow | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/497663d343870304b5ed1a2ebb997aaf09c4b529/4-Figure3-1.png)